Using AI to enhance access to UCT Libraries’ Audiovisual Archives

This year, World Audiovisual Heritage Day coincides with the start of a new project that we are very excited about!

In line with the focus on digital transformation in UCT Libraries, Special Collections is embarking on an experimental project to see how we can incorporate Artificial Intelligence (AI) into the archive. A team of interns from the UCT Centre for Film and Media Studies will help us use AI to create metadata: information that describes the content of an item so that you can discover it and know enough about it to decide whether you want to use it.

Creating descriptive metadata makes material within the archive more accessible. This is especially important for audiovisual (AV) material. Without metadata describing a resource, you must open the resource itself to discover its contents. Selecting the right resources for your research is much faster when you can get an idea of what each item is about without having to go through it in full.

To create descriptive metadata for AV material, one could watch every item and extract information along the way. This would need to be done multiple times to ensure nothing was missed. The task is easier with a transcript, because reading a transcript is much faster than watching a video. Many search engines also search the transcript as well as the accompanying metadata, which makes the item even more likely to show up in relevant searches. Unfortunately, creating transcripts is a long and labour-intensive process.

Well, it used to be. AI makes the process much faster! We consulted the Centre for Innovation in Learning and Teaching (CILT). Having tested many free online transcription tools, the CILT designers noted that only paid options would achieve our goals. Cockatoo and Adobe Premiere Pro were the preferred transcription software packages. Adobe Premiere Pro was less user-friendly than Cockatoo: although it can distinguish between speakers (labelling them Speaker 1, Speaker 2 and so on), it does not cope well with poor sound quality, which is common in our collections.

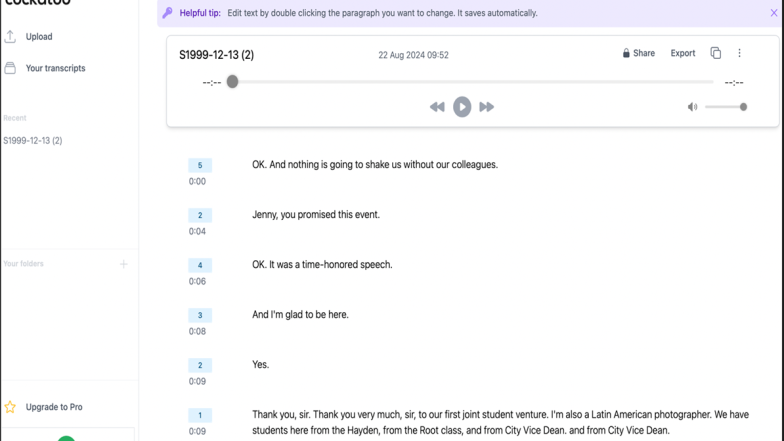

We tested the free version of Cockatoo using a short clip with poor sound quality (31 seconds, 113 MB) from the Centre for Media Trust Collection (BVF17). The transcript was generated as expected, with only one error: it rendered “City Varsity” as “City Vice Dean”. This may be because the name City Varsity is used mostly in South Africa. Despite the low sound quality, the software was still able to pick up what was being said. This confirmed the advice we received from CILT, and we selected Cockatoo for a proof of concept to show that AI can be a useful and important tool for creating descriptive metadata for AV material.

Cockatoo is a simple-to-use AI transcription tool that converts speech to text with timecodes, and it is cost-effective too. Timecodes are important because they identify the precise parts of the footage that researchers are interested in viewing. The student interns will gain experience working with an advanced AI tool as they assist us with this work.

Cockatoo reports an accuracy of 99% in English and supports more than 90 languages, though at lower accuracy rates. One of these languages is Afrikaans. Correcting the transcripts in the Cockatoo interface allows the interns to train the AI to improve its accuracy in languages other than English and with accents it has trouble understanding. Cockatoo can generate a usable transcript of an hour-long AV file in minutes. This substantially reduces the time it takes to create descriptive metadata, which will give researchers wider access to our material than ever before.

To pilot the software, we will use material on our server that was digitised before the Jagger Library fire, such as the Centre for Popular Memory interviews, Centre for Media Trust footage, the newly digitised Simon Bright Collection, and footage or recordings that may have been digitised for research requests. If this phase proves successful, we hope to continue using AI to enhance the accessibility of the AV Archive, both for the material digitised after the fire, once we gain access to it, and for future acquisitions.